I have a few idea-bubbles that are always hanging around my head like the Moon around the Earth, and one of them is this: everything is a trade-off. Trade-offs are everywhere; the important ones are all hard in some way - congratulations, you’re a sysadmin or a developer, your job is to make hard decisions. A few days after I wrote about that, Joel Spolsky posted his talk from Business of Software 2009. He’s a great coder, a great writer, and a smokin' public speaker; you should watch it.

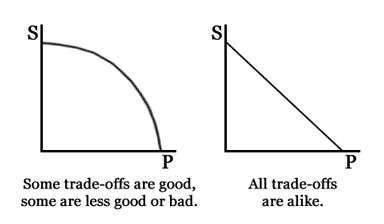

I think that Mr. Spolsky oversimplifies in one aspect of his talk. So I’m going to jump off from there: he says that thinking that you must choose between simplicity and power on a one-dimensional axis is a trap and that you have to construct something else to usefully think about what features software should have. So he talks about elegance and about good features and bad features. I’m going to say it a slightly different way. I just say that you do have to choose between simplicity and power - but it is possible to make good choices and bad choices. Not every choice gives up 1 Simplon of simplicity for 1 Pill of power. There are a lot of choices you can make that trade 2 Simplons for 4 Pills, or sometimes 1 Pill for 87 Simplons. You’d much rather make those than 1:1 trades, and you should spend a lot of time avoiding trades where you give up 1 Simplon and gain 0.005 Pills.
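If it helps to see the exchange rates side by side, here is a minimal sketch that ranks the trades from the paragraph above by how much you get back per unit you give up. The `Trade` class, its `ratio` property, and the `rank` helper are names I made up for illustration, and treating a Simplon and a Pill as directly comparable units is an assumption made purely to do the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trade:
    """One design choice: what you give up and what you get back."""
    label: str
    given_up: float  # amount surrendered, in its own made-up unit
    gained: float    # amount received, in the other made-up unit

    @property
    def ratio(self) -> float:
        # Units gained per unit given up; 1.0 is the "1:1" baseline.
        return self.gained / self.given_up


# The example trades from the paragraph above (hypothetical units).
TRADES = [
    Trade("2 Simplons for 4 Pills", given_up=2, gained=4),
    Trade("1 Pill for 87 Simplons", given_up=1, gained=87),
    Trade("1 Simplon for 1 Pill", given_up=1, gained=1),
    Trade("1 Simplon for 0.005 Pills", given_up=1, gained=0.005),
]


def rank(trades):
    """Return trades sorted from best exchange rate to worst."""
    return sorted(trades, key=lambda t: t.ratio, reverse=True)


if __name__ == "__main__":
    for trade in rank(TRADES):
        print(f"{trade.ratio:>8.3f}  {trade.label}")
```

Anything with a ratio above 1.0 beats the 1:1 baseline, and anything far below it is the kind of trade worth spending time to avoid.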

Too often, we act like the curve on the right is what we’re stuck with - the best we can do is those 1:1 trades. It should be obvious, though, that we can do better. The problem is that to do better, generally we need to make those hard decisions. Achieving trade-offs that are better than 1:1 is hard, and it’s easy to construct (purposefully or not) incentive structures that absolutely destroy the chances of making good trade-offs. My favorite example of this is in airline security - we’re trading security and convenience instead of simplicity and power, but the two problems map onto one another extremely well. The Transportation Security Administration is living proof that 1:1 trade-offs aren’t the only kind - they demonstrate, every day, that you can make far worse trade-offs. They’re the pathologically bad case, where you make so many trade-offs that are so bad that you wind up with far less security and far less convenience.

But why is that? It’s not because the TSA is filled with evil moustache-twirling types who want travellers to be inconvenienced. I believe that the TSA is full of perfectly average humans who act according to their incentives, overseen and directed by perfectly average humans (who happen to be politicians) acting according to their incentives. Those incentives produce the pathology, because they’re not “produce better security” incentives, they’re “avoid punishment” incentives. There are few rewards for actually improving security, and tremendous punishments for letting people think security has been degraded - and because we laypeople assess security pretty badly, a perverse cycle of incentives produces pretty bad security.

This maps directly onto producing software and wrangling production servers: even though it is harder, we need to seek out those better trade-offs, and that means both difficult work and setting up good incentive systems. That also means that the managerial professions need to be very careful about the incentive structures that they set up - the intended outcome of an incentive structure is almost never the only outcome it can produce. When you’re creating systems, if you refuse to take responsibility for better trade-offs, bad ones will creep in.

One of my favorite examples in software is stealing focus - when the window that you thought you were working in gets shoved behind something else that decides that it needs your attention. Stealing focus is, I’m convinced, the sort of sin that means you should never be allowed to touch a compiler again, and furthermore you should probably lose your right pinky, the one that you tap Enter with. Every time I’ve seen a window stealing focus, it’s been at the intersection of the worst programmer habits - hubris in thinking that your program, right now, needs to be the most important thing in my computing world, and bad laziness in that the program is always asking me to make some decision that I don’t actually need to make right now. Of course, the existence of this capability also means that it can be used for malicious purposes, completing a nasty trifecta of bad UX. Far better to use alternate notification methods (the Notification Area, née system tray, in Windows, and bouncing Dock icons or Growl messages in OS X) to convey such information.

Like the security/convenience example, this doesn’t happen because we programmers are finger-steepling connivers of inconvenience. Rather, it happens because too often our incentive structure is disconnected from what actually happens to users of our software. This is part of why startups are a good thing to have in the world: by necessity, a startup deals much more closely with its customers, and developers routinely talk to people who actually use the software.

So the challenge to us is to recognize good trade-offs, and work to make those trade-offs instead of 1:1 or sub-par trade-offs. This involves making difficult decisions for your users - and it involves respecting that they’re busy making their own difficult decisions, and getting out of their way.